A method for automatically generating motion data based on video motion estimation

A motion estimation and automatic generation technology, applied in the field of dynamic data, that addresses the high level of experience otherwise required of staff. It ensures accurate matching and makes the calculation process convenient and fast.

Embodiment 2

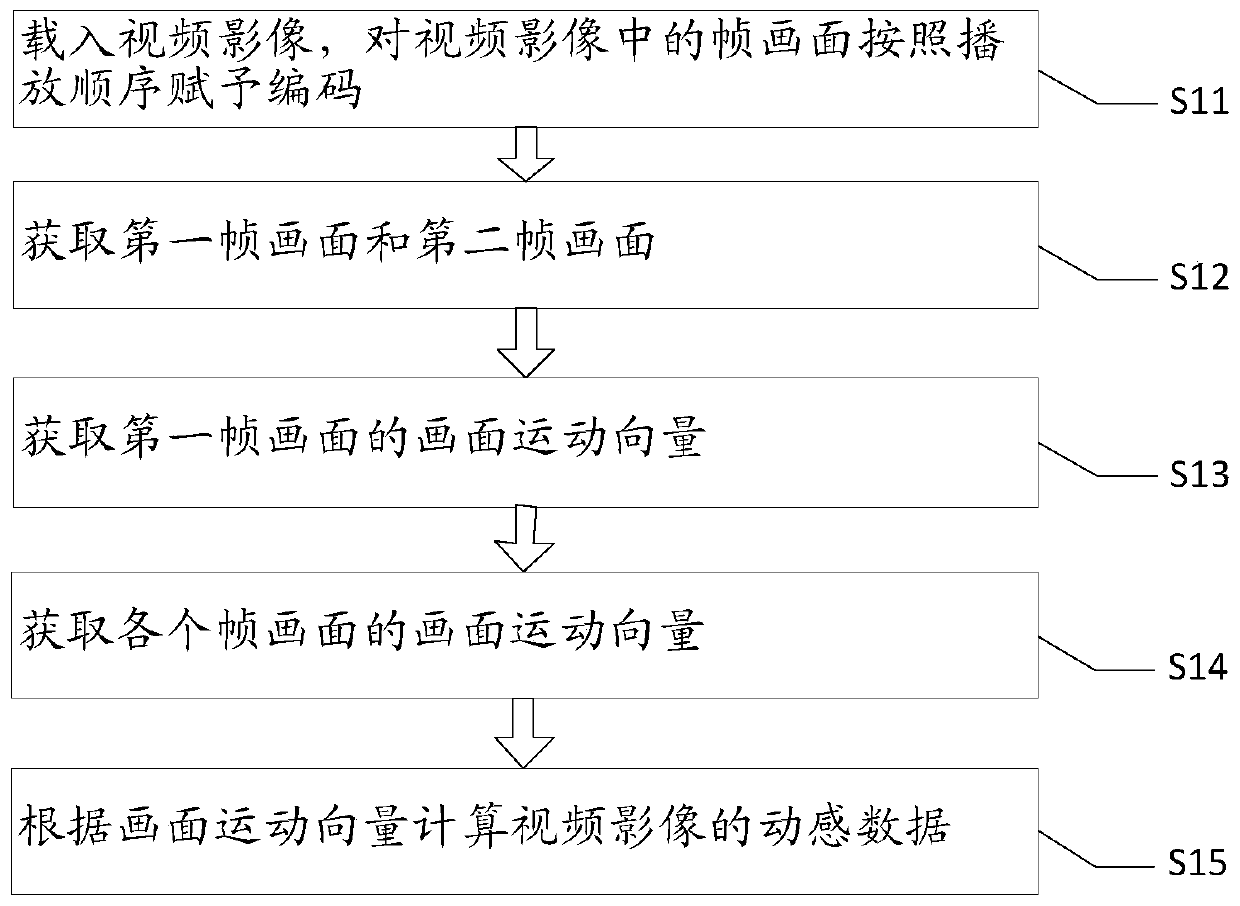

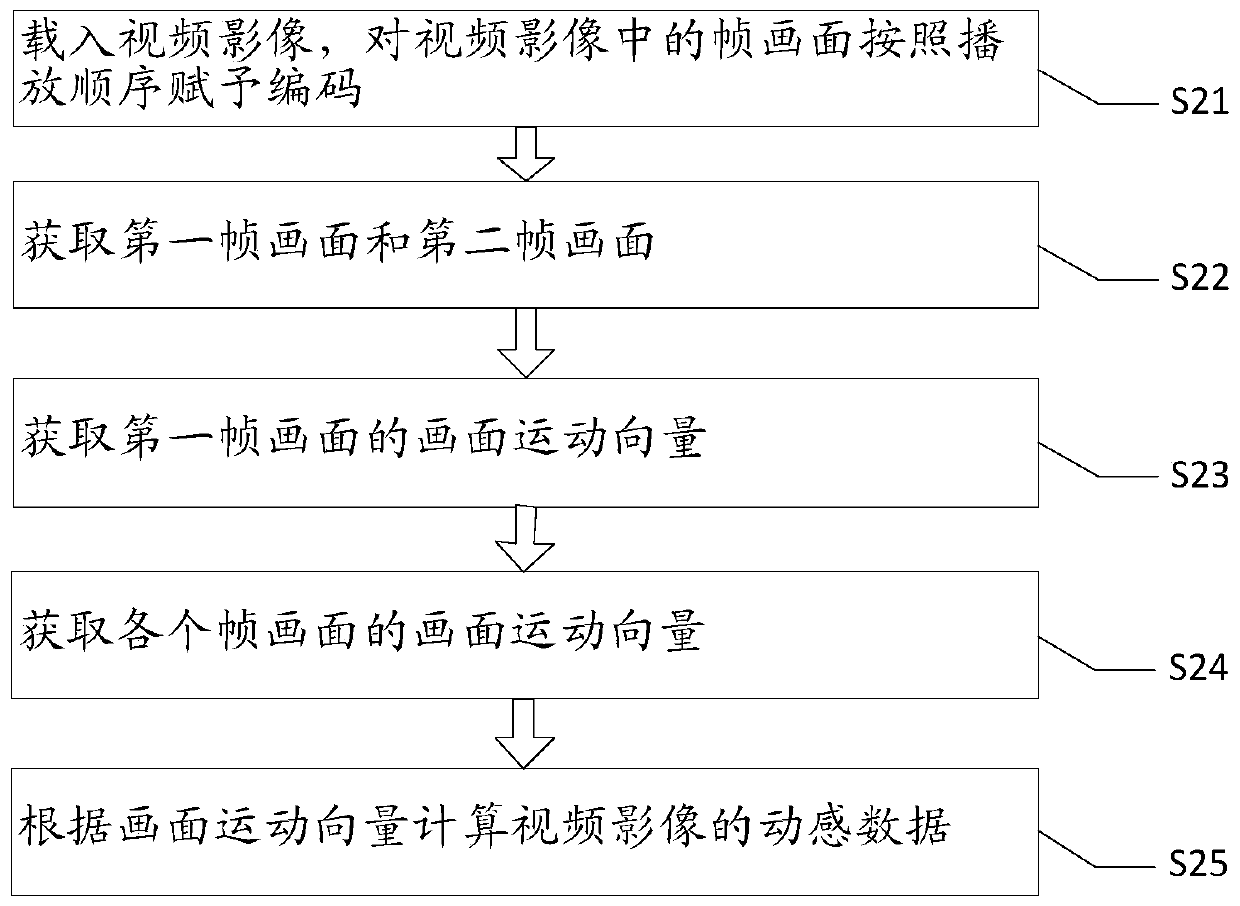

[0084] FIG. 2 is a flow chart of the method for automatically generating dynamic data based on video motion estimation in Embodiment 1. As shown in FIG. 2, the method in this embodiment includes:

[0085] S21: Load the video image, and assign codes to the frames in the video image according to the playback order.

[0086] Specifically, the loaded video image can be any video image: a complete movie or part of one, black and white or color, and it may be silent. The video image is composed of frame pictures, for example 24 frame pictures per second. Every frame picture has a picture motion vector, and all frame pictures have the same shape.
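Step S21 can be illustrated with a minimal sketch. It is not the patent's implementation: the names `CodedFrame` and `assign_frame_codes`, the choice to start codes at 0, and the representation of a frame picture as a nested list of pixel rows are all assumptions made for illustration. The 24 frames per second value is the example rate given above.

```python
from dataclasses import dataclass
from typing import List, Sequence

FPS = 24  # example frame rate from the text; real videos may differ


@dataclass
class CodedFrame:
    """Hypothetical container pairing a frame picture with its code."""
    code: int           # position in playback order (starting at 0 is an assumption)
    timestamp_s: float  # playback time implied by the code and the frame rate
    pixels: Sequence    # frame picture as a nested list of pixel rows


def assign_frame_codes(frames: Sequence[Sequence[Sequence[int]]],
                       fps: int = FPS) -> List[CodedFrame]:
    """Assign sequential codes to frame pictures in playback order.

    Raises ValueError if a frame picture does not have the same shape
    as the first one, since the text states all shapes are the same.
    """
    if not frames:
        return []
    height, width = len(frames[0]), len(frames[0][0])
    coded = []
    for code, pixels in enumerate(frames):
        if len(pixels) != height or any(len(row) != width for row in pixels):
            raise ValueError(f"frame {code} has a different shape")
        coded.append(CodedFrame(code=code, timestamp_s=code / fps, pixels=pixels))
    return coded


if __name__ == "__main__":
    # Two seconds of dummy 2x2 frame pictures at 24 frames per second.
    video = [[[0, 0], [0, 0]] for _ in range(2 * FPS)]
    coded = assign_frame_codes(video)
    print(coded[0].code, coded[-1].code, coded[FPS].timestamp_s)
```

In practice the frame pictures would come from a video decoder rather than an in-memory list. The sketch only shows how a code tied to playback order lets later steps refer to a frame picture unambiguously.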

[0087] S22: Acquire the first frame picture and the second frame picture.

[0088] Specifically, any frame in the video image is acquired as the first frame, and any frame in the v...
