Camera pose estimation method for RGBD (red, green, blue plus depth) data streams

A camera pose estimation technique for RGBD data streams, applied in image data processing, computing, instruments, and related fields. It addresses the problem that camera pose estimation methods are time-consuming and cannot meet real-time requirements, with the effect of reducing the amount of computation and improving accuracy.

Embodiment Construction

[0022] Embodiments of the present invention will be described in detail with reference to the accompanying drawings.

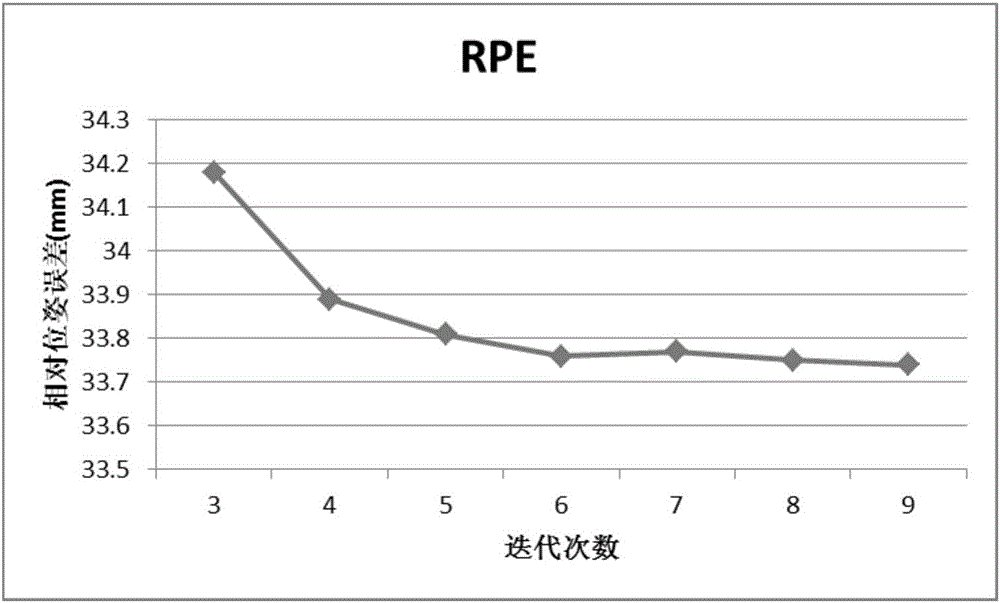

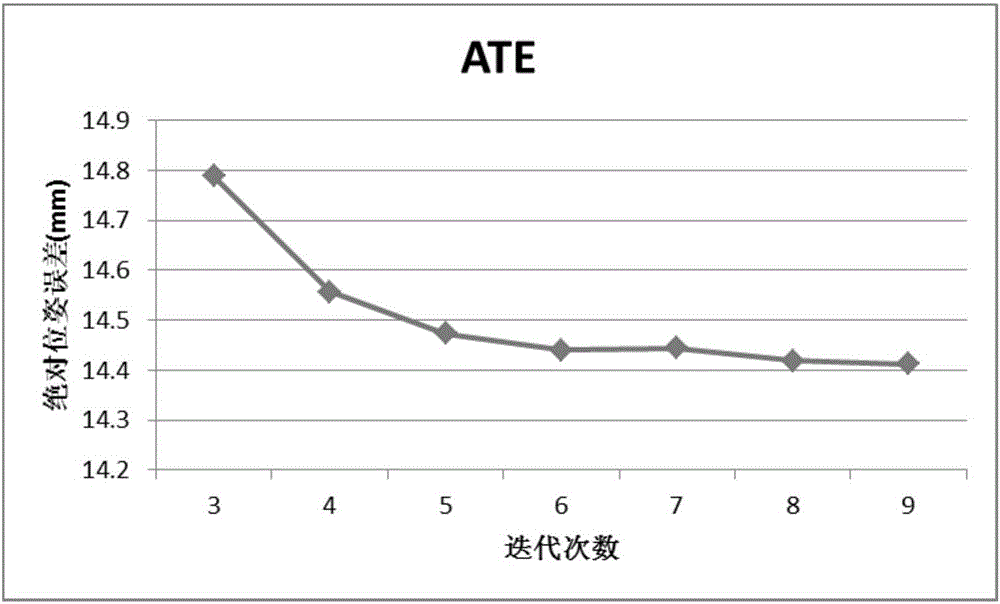

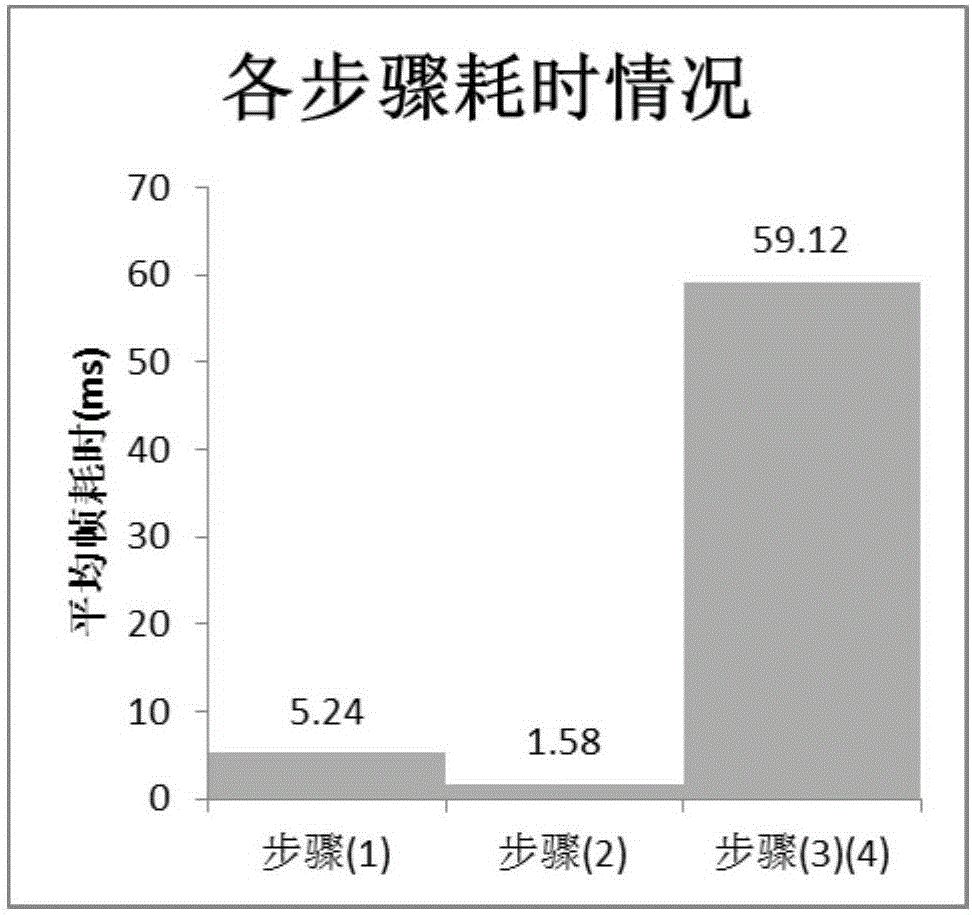

[0023] As shown in Figure 4, the implementation of the present invention is divided into four main steps: depth data preprocessing, building a three-dimensional point cloud map, corresponding point matching, and distance error optimization.

[0024] Step 1. Depth data preprocessing

[0025] The main steps are:

[0026] (1) For the depth data in the given input RGBD (color + depth) data stream, set the thresholds $w_{\min}$ and $w_{\max}$ according to the error range of the depth camera. Points whose depth value lies between $w_{\min}$ and $w_{\max}$ are regarded as credible values, and only the depth data I within the threshold range are kept.
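The range check in (1) can be sketched in a few lines of NumPy. This is a minimal illustration rather than the patented implementation: the function name `filter_depth_range`, the use of `0.0` as the marker for discarded pixels, and the inclusive treatment of the two bounds are all assumptions, since the source only says that values between the thresholds are kept.

```python
import numpy as np

def filter_depth_range(depth, w_min, w_max, invalid=0.0):
    """Keep depth values inside [w_min, w_max]; mark all others as invalid.

    Assumptions (not stated in the source): bounds are inclusive and
    discarded pixels are set to `invalid` (0.0 by default).
    """
    depth = np.asarray(depth, dtype=np.float32)
    # Boolean mask of pixels whose depth is regarded as credible.
    valid = (depth >= w_min) & (depth <= w_max)
    # Credible pixels keep their value, the rest receive the invalid marker.
    filtered = np.where(valid, depth, np.float32(invalid))
    return filtered, valid

if __name__ == "__main__":
    # Hypothetical depth frame in metres with a too-near and a too-far reading.
    frame = np.array([[0.2, 1.0], [3.5, 9.0]])
    filtered, valid = filter_depth_range(frame, w_min=0.5, w_max=4.0)
    print(filtered)
    print(valid)
```

Returning the mask alongside the filtered map lets later stages, such as the filtering in (2), skip discarded pixels without re-deriving which ones they were.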

[0027] (2) Perform fast bilateral filtering on each pixel of the depth data, as follows:

[0028]

[0029] where $p_j$ is a pixel in the neighborhood of pixel $p_i$, s is the number of effective pixels in t...
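Because the formula in [0028] is not reproduced here, the sketch below uses the standard bilateral filter (a Gaussian weight on pixel distance multiplied by a Gaussian weight on depth difference), not the fast variant the patent describes. The function name, the parameters `radius`, `sigma_s`, `sigma_r`, and the convention that `0.0` marks a discarded pixel are assumptions made for illustration only. Invalid neighbors are excluded from the weighted average, and the result is normalized by the sum of the remaining weights.

```python
import numpy as np

def bilateral_filter_depth(depth, radius=2, sigma_s=2.0, sigma_r=0.05, invalid=0.0):
    """Standard (brute-force) bilateral filter on a depth map.

    Assumed conventions, not taken from the source: pixels equal to
    `invalid` are skipped both as centers and as neighbors.
    """
    depth = np.asarray(depth, dtype=np.float64)
    h, w = depth.shape
    out = np.full_like(depth, invalid)
    for y in range(h):
        for x in range(w):
            center = depth[y, x]
            if center == invalid:
                continue  # leave discarded pixels untouched
            # Clip the neighborhood window to the image borders.
            y0, y1 = max(0, y - radius), min(h, y + radius + 1)
            x0, x1 = max(0, x - radius), min(w, x + radius + 1)
            patch = depth[y0:y1, x0:x1]
            yy, xx = np.mgrid[y0:y1, x0:x1]
            # Spatial weight: falls off with pixel distance from the center.
            spatial = np.exp(-((yy - y) ** 2 + (xx - x) ** 2) / (2 * sigma_s ** 2))
            # Range weight: falls off with depth difference, preserving edges.
            rng = np.exp(-((patch - center) ** 2) / (2 * sigma_r ** 2))
            weights = spatial * rng * (patch != invalid)
            # The center pixel always contributes weight 1, so the sum is nonzero.
            out[y, x] = np.sum(weights * patch) / np.sum(weights)
    return out

if __name__ == "__main__":
    # Hypothetical depth step from 1 m to 2 m; the edge should survive filtering.
    step = np.ones((4, 6))
    step[:, 3:] = 2.0
    print(bilateral_filter_depth(step))
```

The double loop costs on the order of the image size times the window area, which is the expense a fast bilateral filter is meant to avoid; the loop form is used here only because it maps directly onto the per-pixel description.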
