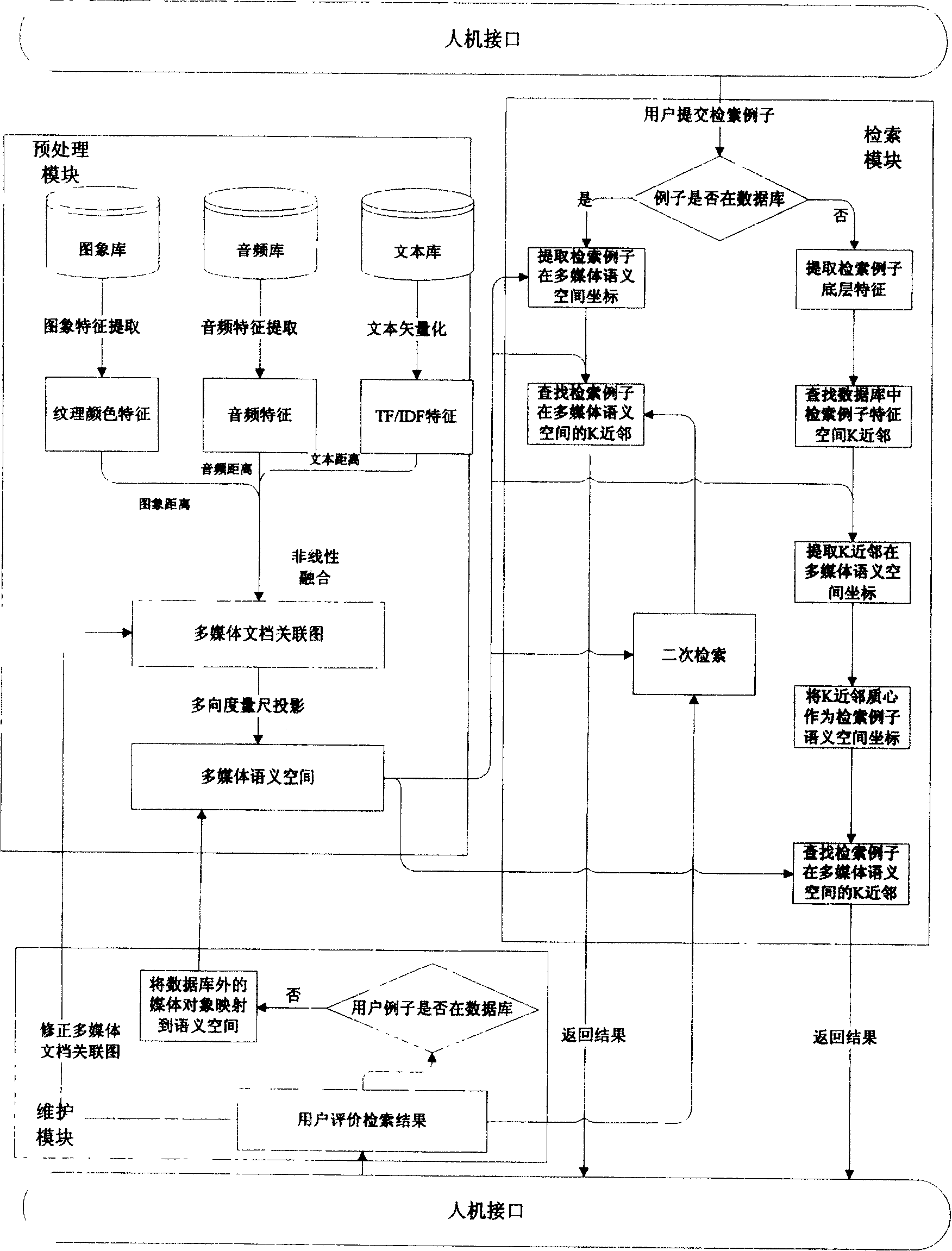

Transmedia search method based on multi-mode information convergence analysis

A multimedia, multi-modal technology, applied in special data processing applications, instruments, electrical digital data processing, and similar fields. It addresses problems such as being unable to retrieve audio or images and unsatisfactory accuracy, and it achieves high accuracy and powerful retrieval.


Embodiment

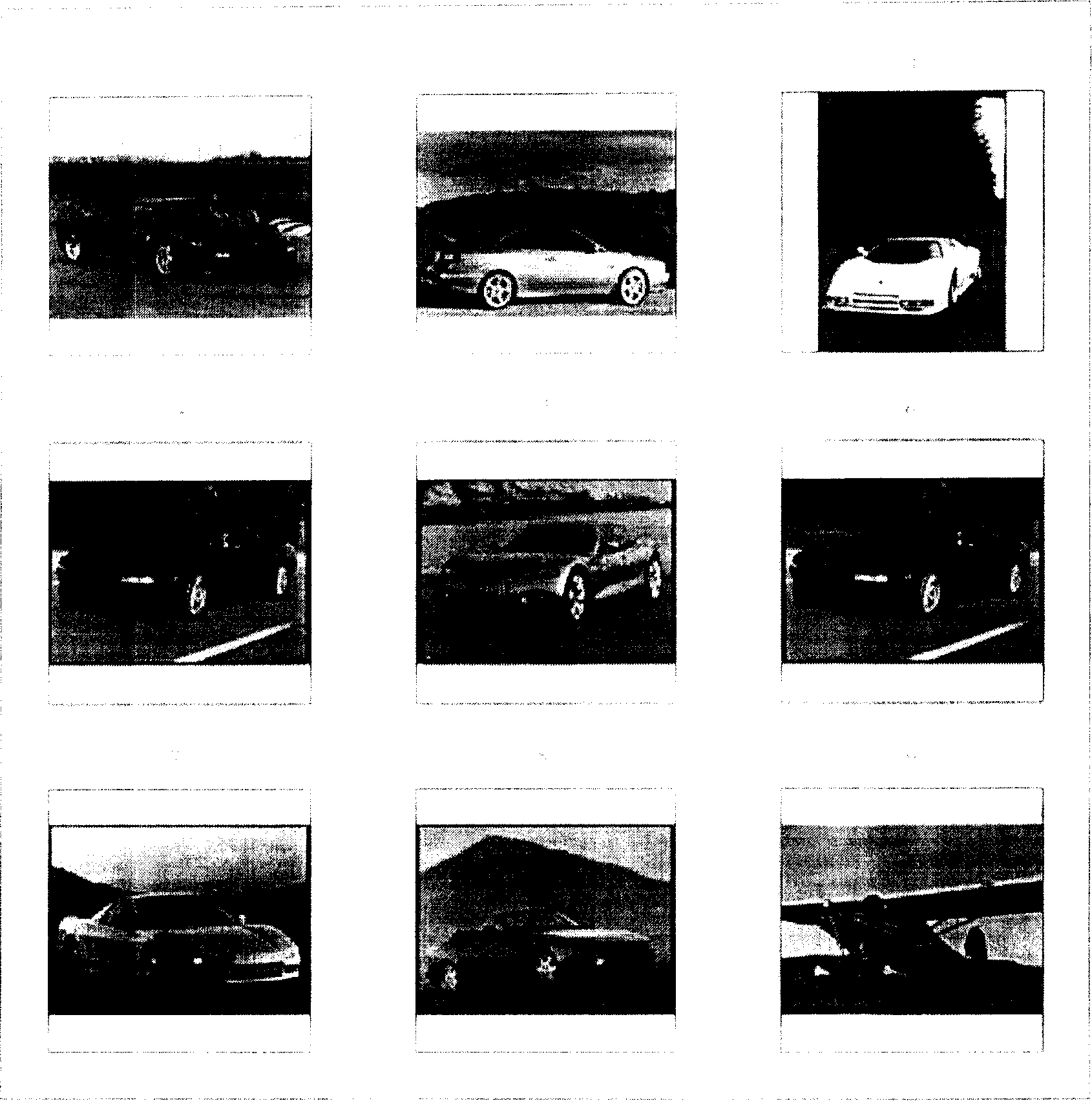

[0046] Assume that there are 900 multimedia documents consisting of 900 images, 300 sound clips and 700 texts. First, extract the low-level features of all images, including the RGB color histogram, color coherence vector and Tamura texture features, and then calculate the pairwise distances between all images. For sound clips, extract four features (root mean square, zero-crossing rate, cut-off frequency and centroid), and then use the dynamic time warping (DTW) algorithm to calculate the distances between all sound objects. For text, use TF-IDF vectorization to calculate the distance between any two text objects. After the media object distances have been calculated, normalize the image distances, text distances and sound distances separately. Then, for any two multimedia documents A and B, first find the distances between the text, sound and image objects belonging to the two multimedia documents respectively, and then calculate the...
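The sound-clip step and the normalization step above can be illustrated with a minimal Python sketch. It is not the patent's implementation: the Euclidean per-frame cost, the min-max normalization, the two-feature frames, and the names `dtw_distance`, `normalize` and `clips` are all assumptions made for illustration, since the paragraph does not specify them.

```python
import math

def dtw_distance(a, b):
    """DTW distance between two sequences of per-frame feature vectors."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            # Local cost: Euclidean distance between frames (an assumption)
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # repeat frame of b
                                 cost[i][j - 1],      # repeat frame of a
                                 cost[i - 1][j - 1])  # advance both
    return cost[n][m]

def normalize(matrix):
    """Min-max scale every entry of a distance matrix into [0, 1] (an assumption)."""
    flat = [v for row in matrix for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in matrix]
    return [[(v - lo) / (hi - lo) for v in row] for row in matrix]

# Hypothetical clips; each frame is [root_mean_square, zero_crossing_rate]
clips = [
    [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]],
    [[0.1, 0.2], [0.1, 0.2], [0.3, 0.4], [0.5, 0.6]],  # clip 0 with one frame repeated
    [[0.9, 0.9], [0.8, 0.7]],
]

# Pairwise sound distances, then normalization so they are comparable
# with the separately normalized image and text distances
sound_dist = [[dtw_distance(x, y) for y in clips] for x in clips]
sound_norm = normalize(sound_dist)
```

Because DTW allows a frame to be matched more than once, the stretched copy of the first clip is at distance zero from it, which is why DTW suits sound objects of different lengths. Normalizing each modality separately keeps one modality's raw distance scale from dominating when the distances are later combined.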
