Data fusion method and data fusion system for laser radar and depth camera

A data fusion technology for a laser radar and a depth camera, applied in the field of data fusion. It addresses problems such as low precision and the narrow field of view of the depth camera, which hinder positioning and obstacle avoidance for mobile devices, and achieves the effects of expanding the detection range and improving the accuracy of the acquired information.

Examples

Embodiment 1

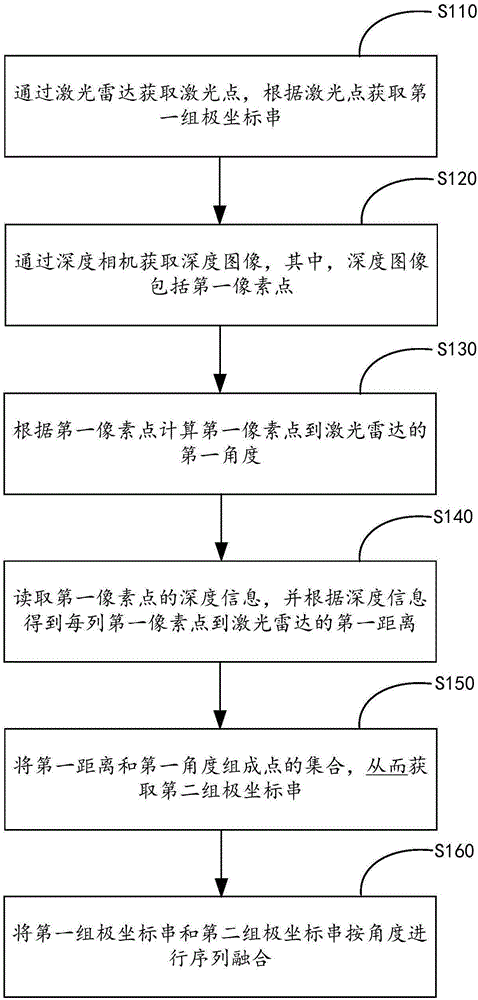

[0053] Figure 1 is a flow chart of the data fusion method for the laser radar and the depth camera provided in Embodiment 1 of the present invention.

[0054] Referring to Figure 1, the method includes:

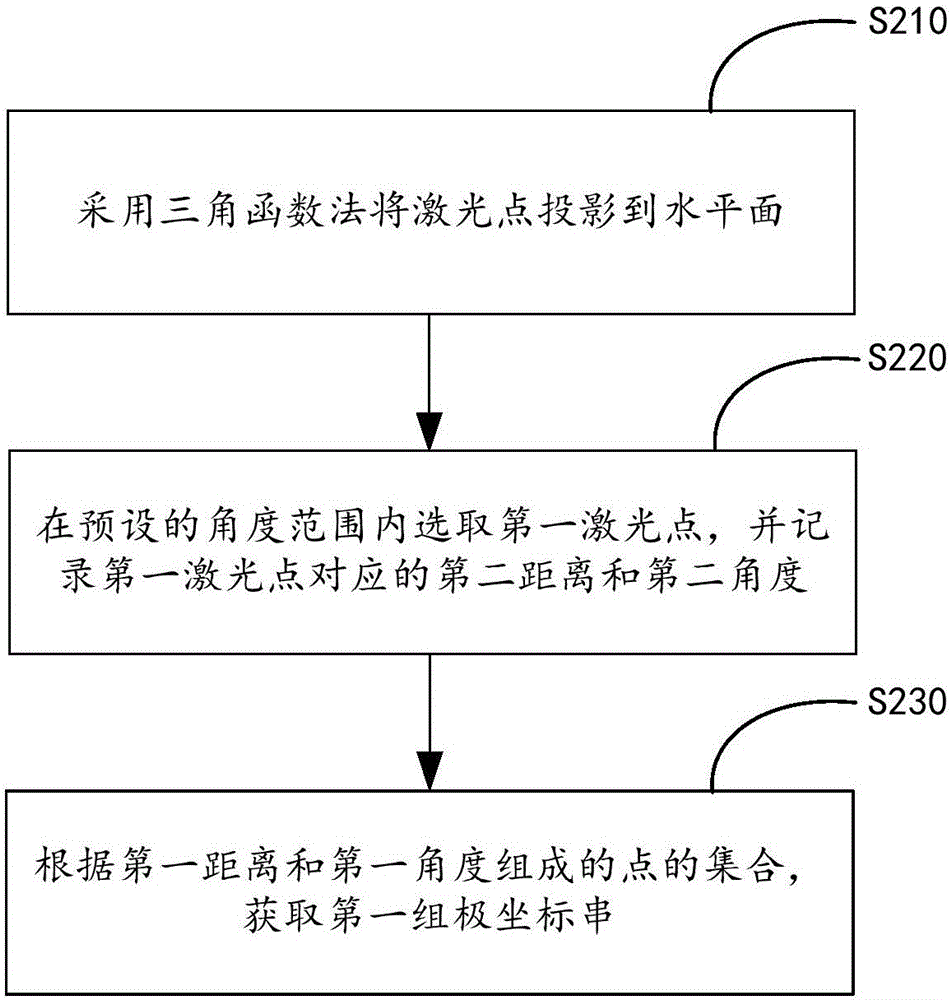

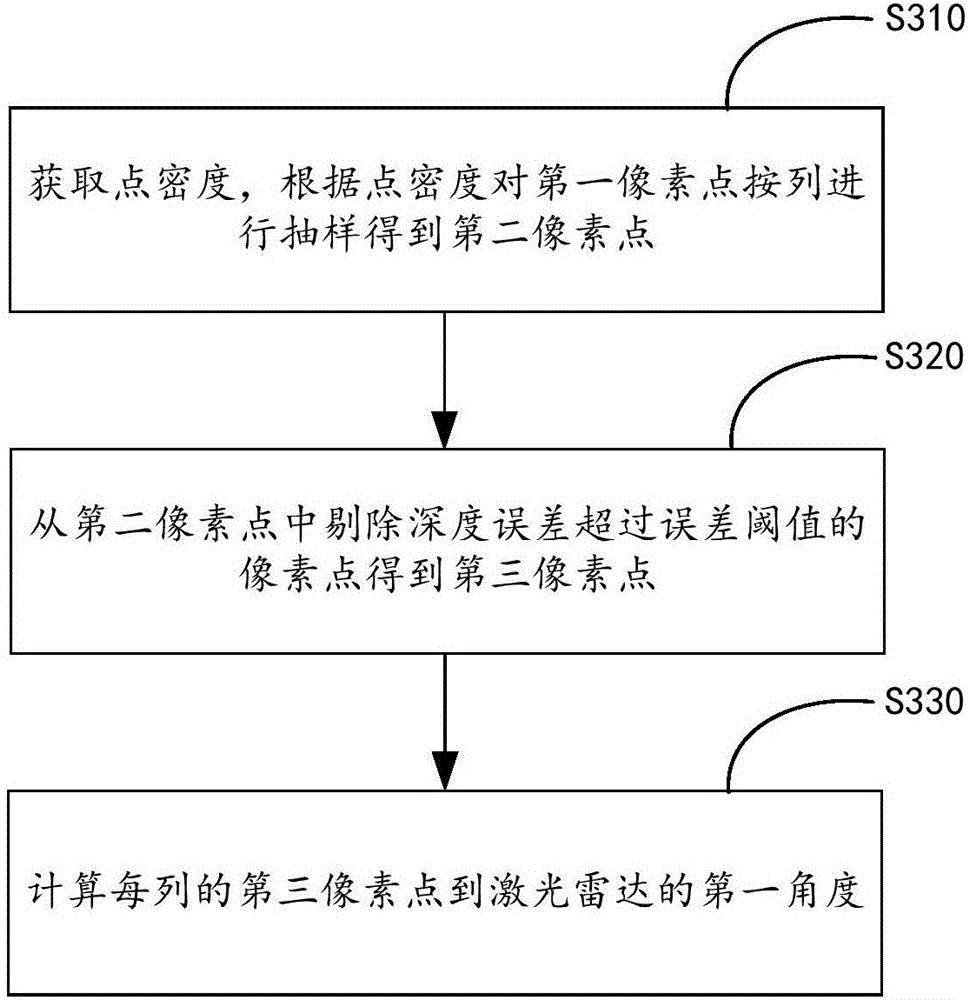

[0055] Step S110: acquire laser points through the laser radar, and obtain a first set of polar coordinate strings from the laser points;

[0056] Specifically, when the lidar scans by rotating a single point, it can obtain a series of points on one or more horizontal planes over a full 360°; when it uses a static scanning method, it obtains a series of points on one or more horizontal planes whose angular coverage cannot reach 360°, but with higher accuracy. When the laser radar is installed, it must be kept parallel to the horizontal plane so that the acquired laser points lie in a plane parallel to the horizontal plane. The first set of polar coordinate strings is denoted $Q_1, Q_2, Q_3, \ldots, Q_n$; in addition,...
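As a rough illustration of Step S110, the sketch below builds a polar coordinate string $Q_1, \ldots, Q_n$ as angle-sorted (angle, distance) pairs. It assumes, hypothetically, that the lidar driver reports each laser point as a bearing in degrees and a range in metres on a single horizontal scan plane; the names `PolarPoint` and `to_polar_string`, and the range limits used to drop invalid returns, are illustrative choices and not part of the patent.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class PolarPoint:
    """One element Q_i of a polar coordinate string: bearing (rad) and range (m)."""
    angle: float
    distance: float


def to_polar_string(
    raw_points: Iterable[Tuple[float, float]],
    min_range: float = 0.05,
    max_range: float = 30.0,
) -> List[PolarPoint]:
    """Convert raw (bearing_deg, range_m) lidar returns into an angle-sorted polar string.

    Hypothetical assumptions (not from the patent): bearings are in degrees,
    the scan plane is horizontal, and returns outside [min_range, max_range]
    are treated as invalid and dropped.
    """
    points = []
    for bearing_deg, range_m in raw_points:
        if not (min_range <= range_m <= max_range):
            continue  # discard no-return or out-of-range readings
        # Wrap into [0, 2*pi) so 360-degree and partial (static) scans share one convention.
        angle = math.radians(bearing_deg) % (2 * math.pi)
        points.append(PolarPoint(angle, range_m))
    # Sort by angle so Q_1 ... Q_n follow the scan direction.
    points.sort(key=lambda p: p.angle)
    return points
```

For example, `to_polar_string([(350.0, 2.0), (10.0, 1.5), (90.0, 0.0)])` keeps two points ordered 10° then 350° and drops the zero-range return as invalid.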

Embodiment 2

[0094] Figure 5 is a schematic diagram of the data fusion system for the laser radar and the depth camera provided in Embodiment 2 of the present invention.

[0095] Referring to Figure 5, the data fusion system for the laser radar and the depth camera comprises a laser radar 100, a depth camera 300, and a data fusion module 200;
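The excerpt does not describe the fusion rule applied by the data fusion module 200. Purely to illustrate how polar point sets from the laser radar 100 and the depth camera 300 could be merged to widen coverage, the sketch below quantises angles into bins and keeps the nearer reading wherever both sensors cover the same bin. The binning, the keep-the-nearer rule, and the name `fuse_polar_sets` are hypothetical choices, not the patented method.

```python
import math
from typing import Dict, Iterable, List, Tuple

Polar = Tuple[float, float]  # (angle in radians, distance in metres)


def fuse_polar_sets(
    lidar: Iterable[Polar],
    camera: Iterable[Polar],
    bin_width: float = math.radians(1.0),
) -> List[Polar]:
    """Merge two polar point sets into one set ordered by angle bin.

    Hypothetical fusion rule: angles are quantised into bins of bin_width radians;
    a bin seen by only one sensor keeps that reading (wider coverage), and a bin
    seen by both keeps the smaller distance (conservative for obstacle avoidance).
    """
    best: Dict[int, Polar] = {}
    for angle, distance in list(lidar) + list(camera):
        a = angle % (2 * math.pi)  # normalise to [0, 2*pi)
        k = int(a / bin_width)     # index of the angular bin
        if k not in best or distance < best[k][1]:
            best[k] = (a, distance)
    # Emit one point per occupied bin in increasing angle order.
    return [best[k] for k in sorted(best)]
```

Keeping the minimum distance per bin biases the fused set toward the nearest obstacle, which suits obstacle avoidance; a weighted average would be an equally plausible alternative where the two sensors' accuracies are known.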

[0096] The laser radar 100 is configured to acquire laser points and to obtain the first set of polar coordinate strings from the laser points;

[0097] The depth camera 300 is configured to acquire a depth image containing a first pixel point; to calculate, from the first pixel point, a first angle from the first pixel point to the laser radar 100; to read the depth information of the first pixel point and, from that depth information, obtain the first distance from the first pixel point in each column to the laser radar 100; and to combine the first distance and the first angle into a set of points, thereby obtaining a...
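The per-column computation in [0097] can be sketched with a pinhole camera model. This is a minimal sketch under several hypothetical assumptions: the horizontal intrinsics `fx` and `cx` are known, depth values are z-depths in metres with 0 marking invalid pixels, the camera's optical centre coincides with the lidar origin (only a mounting yaw `yaw_offset` is modelled), and the nearest valid pixel in each column stands in for the "first pixel point". Because all pixels in a column share the same horizontal bearing $\theta$, the lateral offset is $x = z\tan\theta$ and the range in the scan plane is $d = z/\cos\theta$.

```python
import math
from typing import List, Sequence, Tuple


def depth_columns_to_polar(
    depth: Sequence[Sequence[float]],
    fx: float,
    cx: float,
    yaw_offset: float = 0.0,
) -> List[Tuple[float, float]]:
    """Reduce a depth image (list of rows) to one (angle, distance) pair per column.

    Hypothetical assumptions, not from the patent:
    - pinhole camera with horizontal focal length fx and principal point cx (pixels);
    - depth values are z-depth in metres along the optical axis, 0 meaning invalid;
    - the camera optical centre coincides with the lidar origin, and yaw_offset
      (radians) is the camera's mounting yaw in the lidar frame;
    - the "first pixel point" of each column is taken to be its nearest valid pixel.
    """
    if not depth:
        return []
    width = len(depth[0])
    result = []
    for u in range(width):
        # Collect valid depths in column u and keep the nearest one.
        column = [row[u] for row in depth if row[u] > 0]
        if not column:
            continue  # no valid depth in this column
        z = min(column)
        # First angle: horizontal bearing of column u; left of centre is positive (CCW).
        theta = math.atan2(cx - u, fx)
        # First distance: horizontal range in the scan plane, since x = z * tan(theta).
        distance = z / math.cos(theta)
        angle = (theta + yaw_offset) % (2 * math.pi)
        result.append((angle, distance))
    return result
```

Taking the nearest pixel per column is a conservative choice for obstacle avoidance; the excerpt shown here does not state how the pixel in each column is selected.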
