Particle-filter-based multi-frame reference motion estimation method

A technology combining motion estimation and particle filtering, applied in the field of particle-filter-based multi-frame reference motion estimation, that addresses the problem of insufficient search accuracy and high ...

Embodiment Construction

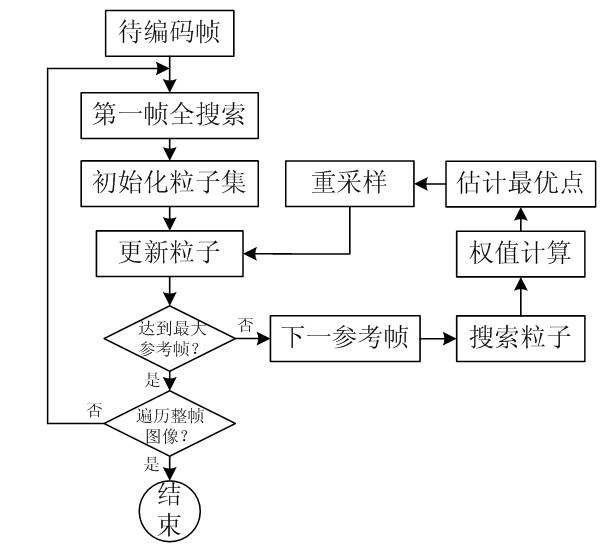

[0043] As shown in Figure 1, the following example illustrates the particle-filter-based multi-frame reference motion estimation method of the present invention. Its steps are as follows:

[0044] (1) In the image sequence, the z coded frames nearest to the frame to be coded are used as reference frames, where 1≤z≤16.

[0045] In this embodiment, the f-th frame is the frame to be encoded and the value of z is 10. Take frame f-1 as the current reference frame, and use the full search method in frame f-1 to perform a motion search for one of the blocks to be coded in the f-th frame (the g-th block, for example), obtaining the encoding cost of every point in the search window of frame f-1; the minimum of these encoding costs is C_min. Select the K points with the smallest encoding cost to form a particle set, where 10≤K≤100; in this embodiment, the value of K is 10. The point corresponding to C_min is chosen as the current best particle ...
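The initialization described in [0045] can be sketched roughly as follows. This is only an illustration under stated assumptions, not the patent's implementation: the source does not define the "encoding cost", so the sum of absolute differences (SAD) is used as a stand-in, and the names `sad`, `initial_particles`, `search_range` and `bs` (block size) are hypothetical. Frames are plain lists of rows of integer pixel values.

```python
import heapq


def sad(cur, ref, bx, by, dx, dy, bs):
    """Sum of absolute differences between the bs x bs block at (bx, by)
    in `cur` and the block displaced by (dx, dy) in `ref`.
    ASSUMPTION: SAD stands in for the unspecified encoding cost."""
    total = 0
    for y in range(bs):
        for x in range(bs):
            total += abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
    return total


def initial_particles(cur, ref, bx, by, bs, search_range, k):
    """Full search over the window in the reference frame (frame f-1),
    then keep the k lowest-cost points as the initial particle set.

    Returns (particles, best_point, c_min), where particles is a list of
    (cost, dx, dy) tuples sorted by ascending cost."""
    height, width = len(ref), len(ref[0])
    costs = []
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            # Skip candidates whose block would fall outside the frame.
            if bx + dx < 0 or by + dy < 0:
                continue
            if bx + dx + bs > width or by + dy + bs > height:
                continue
            costs.append((sad(cur, ref, bx, by, dx, dy, bs), dx, dy))
    # K points with the smallest cost form the particle set.
    particles = heapq.nsmallest(k, costs)
    c_min, best_dx, best_dy = particles[0]
    return particles, (best_dx, best_dy), c_min


# Synthetic 16x16 reference frame and a current frame that is the same
# content shifted by (dx=2, dy=1), so the true displacement is known.
ref_frame = [[3 * x * x + 5 * y * y + x * y for x in range(16)] for y in range(16)]
cur_frame = [
    [ref_frame[y + 1][x + 2] if y + 1 < 16 and x + 2 < 16 else 0 for x in range(16)]
    for y in range(16)
]
particles, best_point, c_min = initial_particles(
    cur_frame, ref_frame, bx=6, by=6, bs=4, search_range=3, k=10
)
```

With K = 10 as in this embodiment, `particles` holds the ten lowest-cost points and `best_point` is the point whose cost equals C_min. How the particle set is then used in the remaining reference frames is not covered by this sketch.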
