Psychometrics plays an important role in mental health, self-understanding, and personal development.

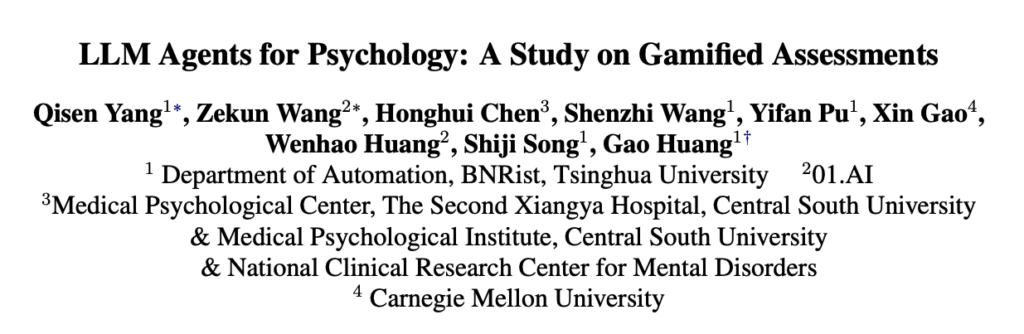

The traditional psychometric paradigm is dominated by self-report questionnaires, in which participants rate themselves by recalling their daily behavioral patterns or emotional states.

Although this method is efficient and convenient, it may provoke resistance in participants and reduce their willingness to be assessed.

With the development of large language models (LLMs), many studies have found that LLMs can exhibit stable personality traits, imitate subtle human emotions and cognitive patterns, and assist in a variety of social science simulation experiments. This provides a foundation and new research ideas for educational psychology, social psychology, cultural psychology, clinical psychology, psychological counseling, and many other fields of psychological research.

Recently, a research team from Tsinghua University proposed an innovative psychometric paradigm built on an LLM-based multi-agent system.

Unlike traditional self-report questionnaires, this approach generates an interactive narrative game tailored to each participant, who can customize the game type and theme.

As the plot unfolds, participants make decisions from a first-person perspective that determine the direction of the story. By analyzing their choices at key plot points, the study measures the corresponding psychological traits.

△ Comparison of the psychometric paradigm of self-report questionnaires (left) and the psychometric paradigm of interactive narrative games (right)

The contribution of this research is mainly reflected in three aspects:

- A new psychometric paradigm that turns traditional questionnaires into game-based interactive measurement. While preserving the reliability and validity of the measurement, it increases participants' immersion and improves their testing experience.

- To realize gamified measurement, the study proposes an LLM-based multi-agent interaction framework called PsychoGAT (Psychological Game Agents). The framework generalizes across psychological test scenarios and remains robust, producing consistent results under different game settings.

- Through automated simulation evaluation and human evaluation, the study shows significant advantages in psychometric statistics and user experience on tasks including the MBTI personality test, PHQ-9 depression measurement, and the cognitive distortion (thinking trap) test.

Next, let’s take a look at the details of the study.

What does PsychoGAT look like?

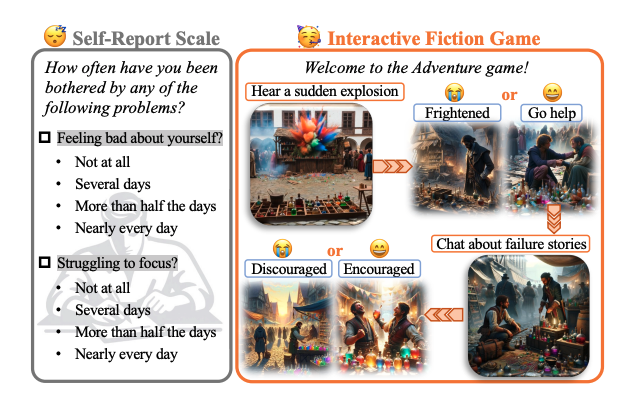

△ Schematic diagram of PsychoGAT framework

Agent interaction process:

Given a traditional psychological questionnaire, participants customize the game type and theme, and the Game Designer agent then produces an overall game design outline.

The Game Controller agent then generates the concrete game plot. During this process, the Critic agent runs multiple rounds of review and refinement on the content the Game Controller produces. The refined plot is shown to the participant; after the participant makes a choice, the Game Controller advances the plot based on that choice, and this interaction cycle repeats.
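The interaction loop described above can be summarized in a short Python sketch. It is not the authors' implementation: the agent callables (`design`, `generate`, `critique`, `choose`), the `PlotNode` and `Session` types, and the `max_review_rounds` cap are hypothetical stand-ins, and in practice each agent would be an LLM call with its own prompt.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class PlotNode:
    text: str           # narrative shown to the participant
    options: List[str]  # choices offered at this plot node


@dataclass
class Session:
    memory: List[str] = field(default_factory=list)   # plot history kept by the controller
    choices: List[int] = field(default_factory=list)  # option index picked at each node


def run_session(
    items: List[str],
    theme: str,
    design: Callable[[List[str], str], str],
    generate: Callable[[str, str, List[str], Optional[str]], PlotNode],
    critique: Callable[[PlotNode, List[str]], Optional[str]],
    choose: Callable[[PlotNode], int],
    max_review_rounds: int = 3,
) -> Session:
    """One game: designer outline, then controller/critic drafting and a participant choice per item.

    All agent callables are hypothetical stand-ins for LLM-backed agents.
    """
    session = Session()
    outline = design(items, theme)  # Game Designer: overall outline for the chosen type/theme
    for item in items:
        # Game Controller drafts a plot node for this questionnaire item
        node = generate(outline, item, session.memory, None)
        for _ in range(max_review_rounds):
            feedback = critique(node, session.memory)  # Critic: None means the draft passes
            if feedback is None:
                break
            # Controller revises the draft using the Critic's feedback
            node = generate(outline, item, session.memory, feedback)
        choice = choose(node)  # participant picks an option from the first-person view
        session.choices.append(choice)
        # Memory update: record the event so the next node continues the story
        session.memory.append(f"{node.text} -> {node.options[choice]}")
    return session
```

Passing the agents in as plain functions keeps the control flow testable with stubs before any LLM is wired in; here a Critic that returns `None` signals that a draft passes review.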

Detailed description of the functions of each agent:

- Game Designer: uses chain-of-thought (CoT) prompting to generate the outline of a first-person narrative game, ensuring that the scenarios in the storyline let participants exhibit the psychological traits currently being measured.

At the same time, it adapts the standard self-report questionnaire to the current storyline so that the two integrate naturally and smoothly.

- Game Controller: instantiates the adapted questionnaire items one by one along the game's storyline, turning each into a plot node and offering options for participants to choose from.

The Game Controller also feeds participants' choices back into the game environment and steers the plot according to those choices. To keep the plot continuous, the controller agent uses a “memory update” mechanism.

- Critic: reviews and refines the content generated by the Game Controller.

It targets three issues:

1) Optimize consistency: as the plot progresses and the text grows longer, the long-context problem worsens, and the “memory update” mechanism alone cannot fully guarantee plot consistency.

2) Ensure unbiasedness: participants' choices shape how the plot develops, but before a participant makes a choice, the Game Controller should not presuppose the plot's direction, even if the participant's earlier choices showed an obvious tendency.

3) Correct missing items: review the plot generated by the Game Controller in detail to check whether it provides basic game immersion.
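The article does not specify how the “memory update” mechanism works. One plausible design, sketched here purely as an assumption, keeps the most recent plot events verbatim and folds older ones into a running summary; the `PlotMemory` class, the `window` size, and the `summarize` callable (which would typically be an LLM summarization call) are all hypothetical. The summarization step is where detail can be lost as the story grows, which is consistent with the need for a separate consistency check by the Critic.

```python
from collections import deque
from typing import Callable, Deque


class PlotMemory:
    """Hypothetical memory-update scheme: recent events verbatim, older events summarized."""

    def __init__(self, summarize: Callable[[str, str], str], window: int = 3) -> None:
        self.summary = ""                  # compressed account of older plot events
        self.recent: Deque[str] = deque()  # last `window` events kept word for word
        self.window = window
        self.summarize = summarize         # (old_summary, evicted_event) -> new summary; an LLM call in practice

    def update(self, event: str) -> None:
        self.recent.append(event)
        if len(self.recent) > self.window:
            # Evict the oldest verbatim event and fold it into the running summary
            self.summary = self.summarize(self.summary, self.recent.popleft())

    def context(self) -> str:
        """Text the controller would condition on when generating the next plot node."""
        lines = [f"Story so far: {self.summary}"] if self.summary else []
        lines.extend(self.recent)
        return "\n".join(lines)
```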

Experiments and results

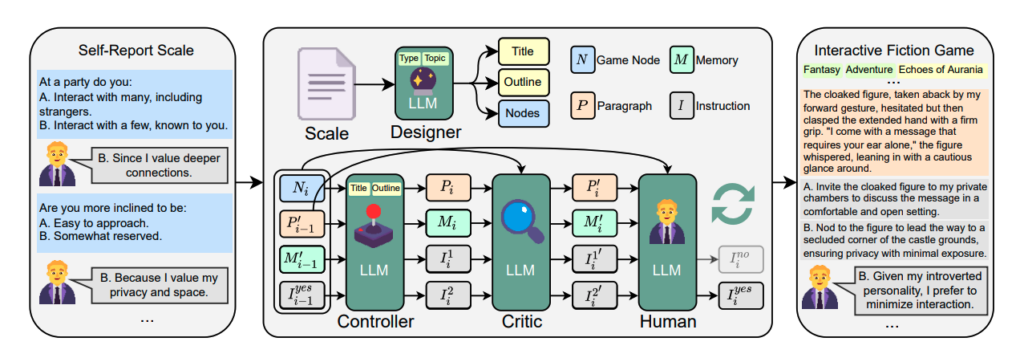

△ Comparison of three common psychological measurement paradigms: traditional questionnaires, psychologist interviews, and the gamified assessment proposed in this study.

All of the paradigms compared here are AI-based automated measurements. In particular, the “psychologist interview” refers to the current LLM-based interview paradigm in which the large language model plays the role of the psychologist.

In the experiments, the researchers chose three common psychological measurement tasks: extraversion in the MBTI personality test, PHQ-9 depression detection, and cognitive distortion detection as used in the early stages of cognitive behavioral therapy (CBT).
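As a concrete example of turning plot choices into a score: the PHQ-9 has nine items, each scored 0 to 3, giving a total of 0 to 27 with conventional severity bands (0-4 minimal, 5-9 mild, 10-14 moderate, 15-19 moderately severe, 20-27 severe). In a gamified version each item becomes a plot node. Assuming each option offered at a node maps to one of the four PHQ-9 response levels (a hypothetical mapping, not taken from the paper), scoring a session reduces to a lookup and a sum:

```python
from typing import Dict, List

# Standard PHQ-9 severity bands (inclusive upper bound of each band) for the 0-27 total
SEVERITY_BANDS = [(4, "minimal"), (9, "mild"), (14, "moderate"), (19, "moderately severe"), (27, "severe")]


def score_phq9(choices: List[int], option_scores: List[Dict[int, int]]) -> int:
    """Sum item scores, where option_scores[i] maps the option picked at node i to a 0-3 score."""
    if len(choices) != 9 or len(option_scores) != 9:
        raise ValueError("PHQ-9 has exactly nine items, so nine plot nodes are expected")
    total = 0
    for picked, mapping in zip(choices, option_scores):
        item_score = mapping[picked]  # hypothetical option -> response-level mapping
        if not 0 <= item_score <= 3:
            raise ValueError("each PHQ-9 item is scored from 0 to 3")
        total += item_score
    return total


def severity(total: int) -> str:
    """Map a PHQ-9 total score to its conventional severity label."""
    if total < 0:
        raise ValueError("PHQ-9 totals range from 0 to 27")
    for upper, label in SEVERITY_BANDS:
        if total <= upper:
            return label
    raise ValueError("PHQ-9 totals range from 0 to 27")
```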

First, the researchers compared PsychoGAT with mature traditional psychological questionnaires to test its psychometric reliability and validity. They then compared it with three other automated measurement methods to examine the user experience of each method.

The researchers used GPT-4 to simulate participants and recorded both the measurement process and the results under each measurement method. These records were then used to compute the psychometric reliability and validity indicators as well as the user experience indicators.

The evaluation uses two groups of indicators: reliability and validity indicators, and user experience indicators.

- Reliability and validity indicators: in psychometrics, whether a measurement tool is scientifically sound is generally verified along two dimensions, reliability and validity.

In this study, two statistics were chosen as reliability indicators measuring internal consistency: Cronbach’s Alpha and Guttman’s Lambda 6. The Pearson correlation coefficient was used as the validity indicator, measuring convergent validity and discriminant validity respectively.

- User experience indicators, rated manually, include:

1) Coherence (CH): whether the content is logically coherent;

2) Interactivity (IA): whether the response to the user’s choices is appropriate and unbiased;

3) Interest (INT): whether the measurement process is interesting;

4) Immersion (IM): whether the measurement process immerses participants;

5) Satisfaction (ST): satisfaction with the overall measurement process.
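The reliability and validity indicators named above have standard formulas. A minimal NumPy sketch, assuming a participants-by-items score matrix: Cronbach's alpha is k/(k-1) · (1 - sum of item variances / variance of the total score); Guttman's Lambda 6 is computed here on the item correlation matrix R as 1 - Σ(1 - SMC_j) / ΣR, where SMC_j is each item's squared multiple correlation with the other items; validity is a Pearson correlation. In the usual psychometric sense, convergent validity would correlate game-based scores with the standard questionnaire for the same trait (expected high), and discriminant validity with a different construct (expected low). The function names are illustrative, not from the paper.

```python
import numpy as np


def cronbach_alpha(scores: np.ndarray) -> float:
    """scores: participants x items matrix of item scores."""
    k = scores.shape[1]
    item_var_sum = scores.var(axis=0, ddof=1).sum()  # sum of per-item variances
    total_var = scores.sum(axis=1).var(ddof=1)       # variance of participants' total scores
    return float(k / (k - 1) * (1 - item_var_sum / total_var))


def guttman_lambda6(scores: np.ndarray) -> float:
    """Lambda 6 from the item correlation matrix R: 1 - sum(1 - SMC_j) / sum(R)."""
    r = np.corrcoef(scores, rowvar=False)
    # Squared multiple correlation of each item with all other items (R must be invertible)
    smc = 1 - 1 / np.diag(np.linalg.inv(r))
    return float(1 - (1 - smc).sum() / r.sum())


def pearson_r(x, y) -> float:
    """Validity coefficient, e.g. game-based scores vs. standard questionnaire scores."""
    return float(np.corrcoef(x, y)[0, 1])
```

With only two items, Lambda 6 computed this way reduces to the inter-item correlation, which makes a convenient sanity check.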

Below are the experimental results.

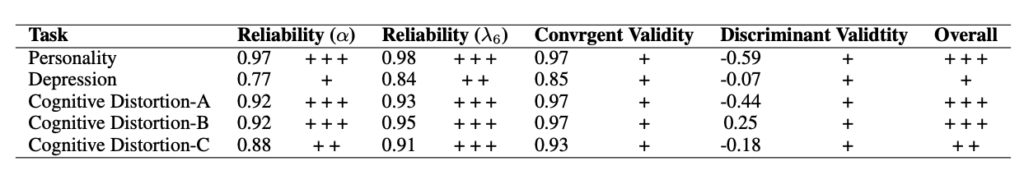

First, the researchers tested whether PsychoGAT qualifies as a psychometric measurement tool. The results are shown in the table.

△ Reliability and validity test results of PsychoGAT (+passed, ++good, +++excellent)

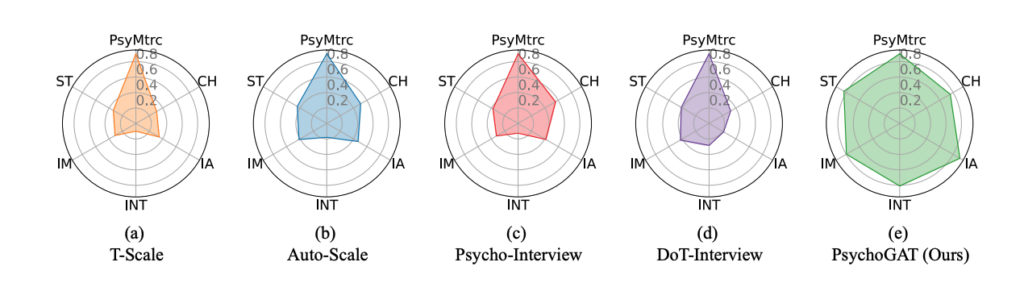

Next, the researchers compared the user experience of the different psychometric paradigms. The gamified assessment proposed in this study significantly outperformed the other methods in interactivity, interest, and immersion:

△ User experience results of PsychoGAT and of the comparison methods

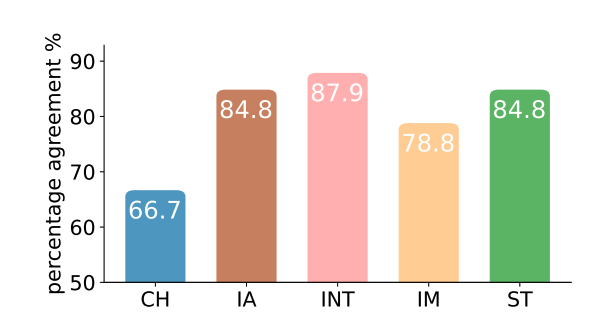

To verify the reliability of the manual evaluation, the researchers measured the agreement among human evaluators. Agreement on every PsychoGAT indicator was higher than for the other methods:

△ Agreement of the manual user experience evaluation for PsychoGAT versus the comparison methods
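The article does not say which agreement statistic was used. As one common option, swapped in here for illustration, Fleiss' kappa measures agreement among a fixed number of raters beyond what chance alone would produce. This pure-Python sketch treats ratings as nominal categories; for ordinal Likert-style scores, a weighted statistic such as Krippendorff's alpha may be more appropriate.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def fleiss_kappa(ratings: Sequence[Sequence[Hashable]]) -> float:
    """ratings[i] lists the label each rater gave to item i; every item needs the same rater count."""
    n = len(ratings[0])  # raters per item
    if n < 2 or any(len(r) != n for r in ratings):
        raise ValueError("each item needs the same number (at least 2) of ratings")
    counts: List[Counter] = [Counter(r) for r in ratings]
    num_items = len(ratings)
    # Observed agreement: fraction of agreeing rater pairs per item, averaged over items
    p_bar = sum((sum(c * c for c in cnt.values()) - n) / (n * (n - 1)) for cnt in counts) / num_items
    # Chance agreement from the pooled category proportions
    pooled: Counter = Counter()
    for cnt in counts:
        pooled.update(cnt)
    p_e = sum((total / (num_items * n)) ** 2 for total in pooled.values())
    if p_e == 1:
        return float("nan")  # all raters used one category everywhere: kappa is undefined
    return (p_bar - p_e) / (1 - p_e)
```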

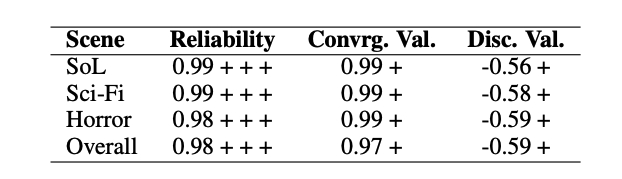

To analyze PsychoGAT further, the researchers first tested the reliability and validity of the gamified measurement across different game scenarios:

△ Robustness of PsychoGAT's reliability and validity across different game scenarios

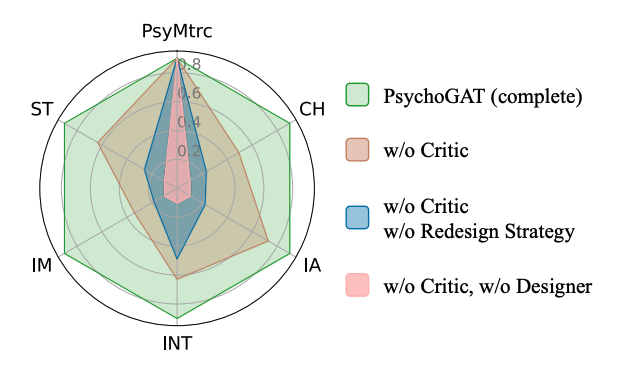

Next, the researchers examined the role of each agent in PsychoGAT:

△ The role of different agents in PsychoGAT

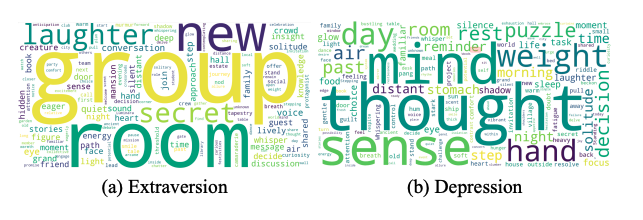

Finally, to present the content PsychoGAT generates visually, the researchers used word clouds to visualize the game scenarios for the extraversion test and the depression test:

△ Word-cloud visualizations of the game scenarios PsychoGAT generated for extraversion measurement and depression measurement

The extraversion test content centers mainly on social situations, while the depression test content focuses more on personal thoughts and emotions.
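A word cloud sizes each word by how often it appears in the text. The counting step behind such a figure might look like the following sketch; the tokenizer regex, the tiny `STOPWORDS` set, and `top_terms` are illustrative assumptions, and a plotting library would then render the resulting counts.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Tiny illustrative stopword list; a real analysis would use a fuller one
STOPWORDS = {"the", "a", "an", "and", "to", "of", "you", "your", "in", "is", "at", "with"}


def top_terms(texts: Iterable[str], k: int = 10) -> List[Tuple[str, int]]:
    """Return the k most frequent non-stopword tokens across the generated scenarios."""
    tokens = (w for text in texts for w in re.findall(r"[a-z']+", text.lower()))
    return Counter(w for w in tokens if w not in STOPWORDS).most_common(k)
```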

For more research details, please refer to the original paper.

Paper link: https://arxiv.org/abs/2402.12326